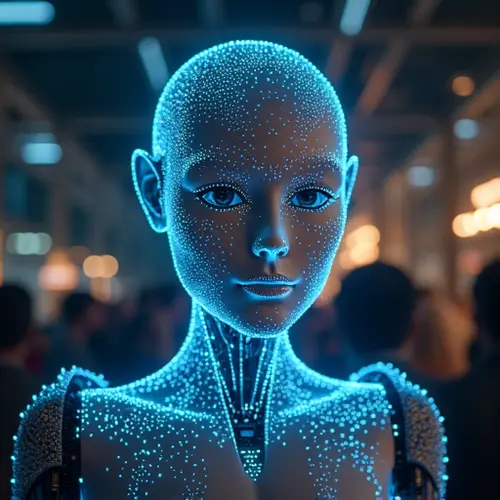

The Rise of Emotional AI

Robots are getting markedly better at recognizing and mimicking human emotions. Thanks to breakthroughs in emotional AI (also called affective computing), machines can now recognize facial expressions, interpret tone of voice, and respond with simulated empathy. This isn't science fiction: companies like Realbotix are already deploying companion robots, such as Aria, that adapt their behavior to your mood.

How Emotional Robots Work

These robots combine cameras, microphones, and other sensors to analyze human signals, tracking everything from subtle facial twitches to changes in heart rate. Algorithms then map these signals to likely emotional states. As Realbotix demonstrated on Good Morning Britain earlier this year, its robots use this technology to hold natural conversations that feel surprisingly genuine.
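To make the idea concrete, here is a minimal sketch of one common way to combine signals from several sensors, often called late fusion: each input produces its own emotion scores, and a weighted average picks the most likely state. This is an illustration only, not how Realbotix or any specific robot works. The emotion labels, the `ModalityReading` type, the weights, and the score values are all assumptions made up for the example.

```python
from dataclasses import dataclass

# Hypothetical label set; real systems may use different or richer categories.
EMOTIONS = ("happy", "sad", "angry", "neutral")


@dataclass
class ModalityReading:
    """Scores from one input channel (all values here are illustrative)."""
    name: str                 # e.g. "face", "voice", "heart_rate"
    scores: dict[str, float]  # per-emotion score, ideally summing to 1
    weight: float             # how much this channel is trusted


def fuse(readings: list[ModalityReading]) -> dict[str, float]:
    """Weighted average of per-channel emotion scores (late fusion)."""
    total_weight = sum(r.weight for r in readings)
    if total_weight <= 0:
        raise ValueError("at least one reading needs a positive weight")
    fused = {emotion: 0.0 for emotion in EMOTIONS}
    for reading in readings:
        for emotion in EMOTIONS:
            fused[emotion] += reading.weight * reading.scores.get(emotion, 0.0)
    return {emotion: value / total_weight for emotion, value in fused.items()}


def top_emotion(fused: dict[str, float]) -> str:
    """Return the label with the highest fused score."""
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # Made-up readings standing in for camera, microphone, and pulse sensor output.
    readings = [
        ModalityReading("face", {"happy": 0.1, "sad": 0.6, "angry": 0.1, "neutral": 0.2}, 0.5),
        ModalityReading("voice", {"happy": 0.2, "sad": 0.5, "angry": 0.1, "neutral": 0.2}, 0.3),
        ModalityReading("heart_rate", {"happy": 0.25, "sad": 0.25, "angry": 0.3, "neutral": 0.2}, 0.2),
    ]
    fused = fuse(readings)
    print(top_emotion(fused), fused)
```

In this toy setup the face and voice channels both lean toward "sad", so the fused estimate does too, even though the heart-rate channel is ambiguous. A robot could then use that estimate to choose a gentler tone or a different response.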

Real-World Applications

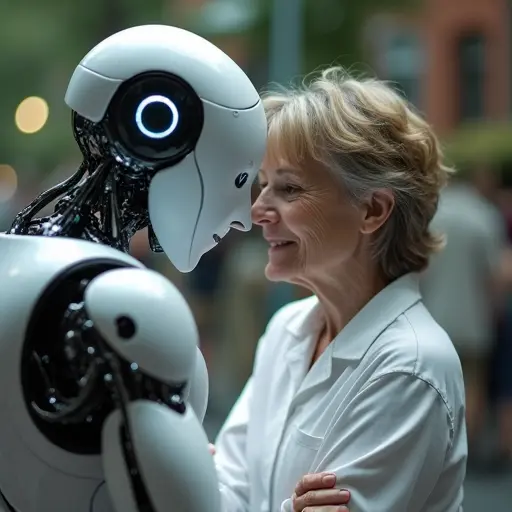

Beyond companionship, emotional AI is finding uses in healthcare and therapy. Robots with synthetic emotions assist dementia patients, help children with autism develop social skills, and provide mental health support. Researchers note that these machines excel at consistent, judgment-free interactions, something human caregivers can struggle to sustain.

The Ethics of Synthetic Bonds

As robots become more emotionally intelligent, ethical questions emerge. Should machines simulate love or grief? What happens when humans form deep attachments? Experts worry about emotional dependency, especially in vulnerable populations. Dr. Lena Petrova, an AI ethics researcher, warns: "We're creating relationships that feel real but lack human reciprocity."

What's Next?

The field is advancing rapidly. New research published on ScienceDirect highlights personalized entertainment robots that tailor interactions to individual users' emotional patterns. Future models may predict emotions before they're consciously felt. As one developer quipped: "Soon your robot might know you're stressed before you do."
